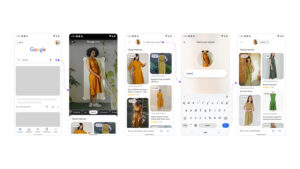

Google has recently introduced a new multisearch feature to its official app on Android and iOS. Built into Google Lens, it lets you combine text and reverse image search in a single query, so you can refine your searches further.

“With multisearch, you can ask a question about an object in front of you or refine your search by colour, brand or a visual attribute,” Google wrote on its official blog. The company added that the feature is made possible by its latest advancements in artificial intelligence, and that it plans to explore ways to enhance it further using its new Multitask Unified Model (MUM).

Multisearch can be especially useful when you are looking for something very specific or hard to describe, or when you have no idea what a particular item is called. Previously, snapping or uploading a photo to Google Lens would only return visual matches, along with links to the stores or web pages that host them. With this new functionality, you can now add keywords to narrow down your search or find variations of a subject (e.g. a particular dress in a different colour).

However, it may be a while before we can try out the feature for ourselves. The Google app’s new multisearch function is currently in beta and is only available to users in the US. Unfortunately, the company has not yet revealed any plans to release it in other regions.

(Source: Google official blog)

The post Google Combines Text And Image Searches Via New Multisearch Feature appeared first on Lowyat.NET.